Deflating the universe

Tagged with: [ compression ] [ deflate ] [ gzip ] [ huffman ]

Compression is used all around us every single day. You (or your girlfriend/wife most likely) folds your clothes nicely so they all fit in your closet. When recycling, you flatten the cardboard milkboxes so they take up less space and you probably even text your friends with messages like: “hi, how r u? w r u? cya, xxx”. Stuff like that.

In the computer world, compression probably plays even a more important factor. When compressing data, it take less space and thus less time to send it over to somebody else through internet. Years ago, you bought a 100MB harddrive and use special software to increase the capacity to (up to) 200MB (doublespace, stackspace for those who can remember). It all uses compression one way or the other.

Some compression methods can compress and decompress in such a way that the decoded output is exactly the same as the original. For instance, gzip and deflate. It’s called lossless compression since no data is lost during the compression/decompression. Other compression methods don’t. For instance, JPEG or MP3 compression creates smaller copies of the original but they can never be decompressed back to the original format. Colors that are very similar (but not quite the same) are converted into 1 single color during JPEG compression. Or inaudible sounds are removed from an audio file when compressing to an MP3. With JPEG or MP3’s, for most people this is not an issue. The images are perfect enough for normal usage, and the audio quality of mp3’s are also good enough for the average use (although I know a few persons who want to kill me for saying this :)).

In this article I will talk a bit about the deflate compression method. A compression method used throughout the whole internet and probably the most used compression algorithm today.

Deflating GZIP

First about gzip. GZIP itself is a file format, not a compression method. It’s used on many platforms and has many purposes. It consists of a binary header and afterwards the actual compressed data. The compression used in GZIP is the deflate compression algorithm so when talking about gzip, you are actually talking about deflate but with some extra stuff added to it.

More on the gzip file format can be found on http://www.gzip.org/zlib/rfc-gzip.html#file-format.

Deflate Deflate is used in many things. For instance: PNG files are compressed with it and the webserver

you just received this page from, compresses this page prior to sending it to you (the “content-encoding: gzip”). It’s also part of gzip as said which is used as de facto compression method for files on linux (although bzip2 can compress even better).

The deflate algorithm can be found on http://tools.ietf.org/html/rfc1951, but it’s a little complicated when you never have dealt with it. It basically a mixture of two different compression techniques merged into one:

- duplicate string elimination

- compression though huffman coding (trees)

Duplicate string elimination, Duplicate string elimination

By duplicate string elimination we mean that when you have duplicate strings in the code (for instance:

AAAAA), you can also specify (5xA), which tells the decompression it has to print 5x the A. Sounds simple

enough and this is a very good elimination when compression files like html files, source codes or human text

since a lot of repetitions are bound to happen in those files.

Duplication elimination removes the duplicate content and replaces it with a sort of internal command: copy L times the bytes found D positions back. (where L stands for length, and D stands for distance)

An example:

<html>

<title>Test</title>

</html>There are 2 repetitions here: html> and title>. The software can detect this and tells on the second line

to use a length of 6 bytes in a distance of 12 bytes.

12 bytes back, we are on the ‘t’ of

The [6;12] part is probably encoded in less space (say: 1 byte), so in the end we just have saved 5 bytes by eliminating strings (the same goes for the duplicate ‘html>’ string). How this is incorporated into deflate we will see later on.

Huffman trees

Deflate uses the huffman coding for compression. It basically boils down to this: the characters most used in the original file, gets the least amount of bits inside the compressed file. How many bits a certain character gets, is stored in a lookup table called the alphabet (or huffman tree, the same thing). Since each file is different, we use different alphabets for different files (we could even have different alphabets in the same file!).

So, before we can do any decoding, we must read the alphabet (there are actually 2 of them). To make matters a bit more complex (we are dealing with a serious stuff here of course), the alphabet itself is stored compressed, using ANOTHER alphabet. This alphabet is luckely known since it’s always the same alphabet (and must be hardcoded in the (de)compression routines).

Let’s start with a simple example:

Suppose we have to following alphabet, taken from the input string ‘DEADBEEFFE’ (how we have made this alphabet is not important for now):

D 2 bits: 01 A 4 bits: 0010 F 4 bits: 0001 B 4 bits: 0011 E 1 bit: 1

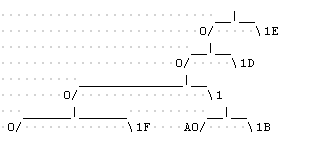

This alphabet consists out of 5 characters (from A to F, without the C). As you can see, the E is used the most time, and thus has gotten the least amount of bits. the D comes in second (with 2 bits). The reason we don’t use 3 bits for the ‘F’ for instance, is that we get an overlap. Suppose the F would be encoded like ‘001’. How would we know if we either have found an ‘F’ OR if it’s part of the A or B (because both start with ‘001’ as well. The encoder must make sure the bits don’t “overlap” like this and the best way is to see the alphabet as a tree-structure (hence: an huffman tree)

As long as a node has a branch, it cannot contain a character. It’s up to the encoder to make sure the alphabet is created correctly.

Now, with this alphabet the encoding and decoding of the string is easy. For encoding: read a byte, seek it in the tree while adding the bits to the output. For decoding: get bits from the compressed file, follow it down the tree until you hit a character and there place that character in the output.

With this tree, you can compress the ‘DEADBEEFFE’ string into this

D: 01 E: 1 A: 0010 D: 01 B: 0011 E: 1 E: 1 F: 0001 F: 0001 E: 1

Which gives use the bit string of:

01 1 0010 01 0011 1 1 0001 0001 1

and grouped into bytes:

0110 0100 | 1001 1110 | 0010 0011

6 4 9 E 2 3 (hex code)

We just compressed a 10 byte string into 3 bytes ! The string “DEADBEEFFE” is the same as 0x64 0x9E and 0x23!

Decompressing is also relative simple. Just read a bit from the bitstream and follow down the tree until you hit a character (or until the tree ends, in that case you have a incorrect file!)

First bit: 0

since bit 0 on the top of the tree has 2 branches, read the next bit

next read: 1

That branch does not have any other branches and just contains the value D. Output the ‘D’ and start over:

first bit: 1

There is one possibility: the E. output the ‘E’ and start over..

at the end, we get our string DEADBEEFFE back!

Compression is not really that hard after all! :)

Deflating blocks

The deflate format compresses data into blocks. Each block has it’s own method of compression. There are 3 different ones, so your decompressor needs to be ready for all three of them. Each block has a 3 bit header which tells you if it’s the last block (most of the time, there is only one block inside the file, so it’s automatically the last block. That got me debugging the thing for a while until I figured that one out). The next 2 bits tells you which compression method is used. Allthough a maximum of 4 are possible, 1 is reserved so only 3 are left:

- This block is literal data. It’s not compressed

- This block is compressed with a STATIC huffman tree.

- This block is compressed with a DYNAMIC huffman tree.

- reserved. You should not encounter this.

Method 1 is the easiest to decompress. The next 2 bytes holds the length of the uncompressed data so the input can be passed directly to the output. Useful for encoding blocks that are already encoded.

Method 4 should never happen. If this is the case, you might have stumbled onto either a new kind of deflate format or an error in the file. Either way, you cannot decode it so return with an error.

Methods 2 and 3 are the actual meat of the compression. The huffman coding.

The only difference between method 2 and 3 is that method 2 uses a static huffman tree. This means the alphabets that are needed to decode the file are not present in the encoded file, but a general one is used. This one can be found in the RFC. This saves a lot of room since we don’t have to incorporate the alphabet, but it might give us a less than optimal compression. It’s up to the compressor to decide whether or not it’s viable to compress with the fixed tree or not.

The dynamic huffman compression means we first have to get the alphabets that are used to compress the block. These alphabets are stored before the data BUT are in itself encoded (to save room obviously). The alphabet used to encode this data is fixed (but is not the same as the one used for fixed huffman compression) and can be found in the RFC (it’s an easy one actually).

Now.. As seen above, an alphabet can have a number nodes. A binary file consists of bytes ranging from 0 to 255, so we need at least 256 nodes in our tree. However, here are more: 288 in total to be exactly. This is because the first alphabet is a mixture of 2 different alphabets: The literal alphabet and a length alphabet (we look at that later). The first 256 characters are literals. The rest from the characters are lenghts.

When your decompressor decodes a character between 0 and 255, it means that this is a literal character from the original file. You can place this directly into the output buffer. A character 256 means this is the end of the block. the block is done when you hit this character. The characters 286 and 287 are reserved and you should not encounter them. All characters between 257 and 285 are “length” characters and here is where the “string duplication” comes into play.

Decompressing lengths and distances

When character 257 or higher is found, it means the character is not a literal but a “length”. Namely the length of the string we need to duplicate. character 257 means the length is 3 characters, 258 means the length is 4 characters. From character 265 and up things get special again. This character means: use the length of 11 PLUS the next bit. This bit can either be 0 or 1, and you have to add it to the length. So character 265 + the next bit 0 means a length of 11, the character 265 + next bit 1 means use length 12.

Further down the line the bits we need to read extra gets higher and thus the length range gets higher as well: character 282 has to read 5 extra bits and can createa a length of between 163 and 194.

These distances can be found on http://www.w3.org/Graphics/PNG/RFC-1951#codes

Notice that the maximum length can be 258 bytes (when using character 285). This means, when the compressor has to duplicate a string of 300 bytes, it must either use character 285 (no extra bits) for the first 258 bytes and character273 plus 3 ‘1’-bits so it knows it has to use length 42, OR it can use the codes for 2x 150, or 3x 100, etc.. depending on what the compressor thinks (or knows) is shorter, that one will be used).

We now know the length we need to duplicate, not WHERE the string is we need to duplicate. This can be found in the other alphabet we have decoded earlier called the distance alphabet. This is also a fixed alphabet when we use the fixed huffman encoding.

The distance alphabet works the same way as the length alphabet. There are 32 characters (ranging from 0 to 29 plus 2 reserved entries). Character 0 means the distance is 1 byte. Character 3 means the distance is 4 bytes. From character 4 to 29, we have to read more bits again to decide how big the distance is. For instance, character 26 is followed by 12 bytes and it can hold a distance from 8193 to 12288. The maximum distance we can have is 32768 bytes back. Which means 2 things:

- Make sure you keep a record of the last 32768 bytes outputted (since you might have to read them back). 2. Duplicating contents further away than 32768 bytes in the original fill will not be compressed by string duplication elimination. There cannot be 2 “distances” right after each other, just like you can with the “length” characters.

Know your alphabet

It’s not necessary for the encoder to send over ALL characters in an alphabet. When some characters are not used the node is left empty and used by something else. Either way, the alphabet gets smaller. So it can happen that the literal alphabet is only 16 chars long instead of 256. This happens when you have only 16 distinct character in your file. Same goes for the lenght and distance alphabets. This all again in the sake of sending as few bits over as possible. The alphabets are prefixed with some info about how many characters (literals, lenghts, distances etc) are inside the alphabets.

Encoding through deflate means knowing how to find the correct alphabet. This can be done statistically (the ‘E’ is more used then the ‘Q’ in english, so the ‘E’ gets less bits), or by actually reading the file and creating percentages for all characters. Since the latter will take time but creates a better compression, you can specify the amount of work the encoder will do when creating the alphabets. This is the ‘-1’ to ‘-9’ parameter you sometimes see when using gzip (ie: gzip -9 myfile.txt). The -9 means: do the most amount of work to get the best alphabet.

As you can deduce: the amount of work spend on ENCODING a file, does not necessarily means that the amount of work DECODING the file will also be higher. It’s still an alphabet, only better equiped for the current job.

Conclusion

Compression algorithms are hard in general. The main effort is to save as much space as possible and normally a tradeoff will be that more has to be done by the processor to compute the original values. There are a lot of libraries out there capable of handling gzip/deflate data like libz, and even php has it’s gzip_decode() functionality.

The reason I’ve dived into deflate / huffman coding was not only for fun and gaining knowledge, but also for my own hobby OS project that can load a gzipped kernel. By reading the RFC’s for gzip and deflate, you gain a lot of information but I found that the best way of getting to KNOW huffman and deflate is by viewing the source of David Madore www.madore.org/~david/programs/selfrep/selfunc.c so a big thanks goes out to him.

Please, since I do not know everything about deflate/huffman yet, chances are somebody will probably spot an error or 2. Please mail me or leave a comment.

Interessting links

- RFC for Deflate algorithm * Huffman encoding * GZIP format * www.madore.org/~david/programs/selfrep/selfunc.c