Back to basics: virtual memory


Memory is something (almost) no computer can do without. In today’s world the saying goes: the more memory, the better. But the way computers use memory is very different from how they did only a few decades ago, and “more memory” does not always equal better performance. Here is a small introduction to, and history of, memory usage.

Remember the Bill Gates quote that “640kb should be enough for everybody” (which wasn’t his, by the way)? In that day and age, processors and address buses couldn’t cope with the amounts of memory we have today. They used a different system for accessing memory, which had its pros and cons (mostly cons, though). It was a rather obscure way of dealing with memory called segmentation, and it created lots of overlap and difficulties since each memory location could be addressed in many different ways. This could be very confusing, and it is still the mode in which your (386-compatible) processor starts up today. However, modern OSes do only the least amount of work inside this “segmented memory model” before jumping to a more friendly (but still confusing) way of accessing memory called “paged virtual memory”.

The nice thing about virtual memory is that we don’t need to care about the actual layout. From an application’s point of view, it’s one large piece of memory that it can use. On a 32-bit system, every program you run has an address space of 4GB. If it wants to allocate 4GB of memory, it’s basically free to do so. The fun part is that EVERY program can do this, even when you don’t have that much memory inside your system. This 4GB address space is completely isolated from the address spaces of other processes. This means that location 123456 for process A can hold a different value than location 123456 for process B. However, from the operating system’s point of view, more work has to be done. (Note that the 4GB limit can be raised even on 32-bit systems, so you can use more memory if needed.)

Confusing? Maybe, but let’s look at it differently from the view of an application (or process to be exact).

When a process starts, it will need some memory. Some memory must be allocated to hold the actual program code, some memory will be allocated for variables (data), and so on. And most probably your program will need to allocate memory at runtime, for instance, room to store input from files or users. Suppose we want to allocate a memory block of 10 megabytes. We cannot do this ourselves; we must ask the operating system to do it for us, typically through a malloc() call. When this call succeeds, it gives you a memory address where 10 megabytes of space is reserved. Now the thing is that this block of memory isn’t really occupied until you actually use it. The OS has just reserved it for you. And as with any system that works with reservations, it can overbook the actual space left (just like hotels, airlines etc). This is why it’s possible to have your programs use more (virtual) memory than is available on your system.
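To get a feel for “reserved but not yet used”, here is a minimal sketch in Python. It uses the standard mmap module as a stand-in for what a large malloc() ends up asking the OS for; the function name reserve_and_touch is made up for this example, and whether physical memory really stays unused until the first write depends on your OS.

```python
import mmap

def reserve_and_touch(size):
    """Reserve `size` bytes of anonymous memory and touch only a little of it."""
    block = mmap.mmap(-1, size)      # -1 means: not backed by a file
    try:
        length = len(block)          # the whole range is addressable right away
        block[0] = 42                # write a single byte into the first page
        first, last = block[0], block[size - 1]
        return length, first, last   # memory we never wrote to reads as zero
    finally:
        block.close()

print(reserve_and_touch(10 * 1024 * 1024))   # (10485760, 42, 0)
```

The program sees all 10 megabytes from the start, even though it has written only one byte.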

But what happens when your applications actually start using that memory?

The operating system is informed when a “new” block of memory is being accessed. A block (called a page) is normally 4KB in size. The OS can then make room inside your PHYSICAL memory for that block, and once it’s done the application can use it. This triggering of the operating system happens automatically through a mechanism called a “page fault exception”.
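The on-demand part can be modelled in a few lines. This is only a toy: the class ToyProcessMemory and its method names are invented for illustration, and a real OS does this inside the kernel with help from the processor.

```python
PAGE_SIZE = 4096  # 4KB pages, as in the text

class ToyProcessMemory:
    """Hypothetical model: a page gets a physical frame only on first access."""

    def __init__(self):
        self.page_table = {}      # virtual page number -> physical frame number
        self.next_free_frame = 0
        self.page_faults = 0

    def _handle_page_fault(self, page):
        # The "OS" part: pick a free frame and map the page to it.
        self.page_faults += 1
        self.page_table[page] = self.next_free_frame
        self.next_free_frame += 1

    def access(self, address):
        page = address // PAGE_SIZE
        if page not in self.page_table:
            self._handle_page_fault(page)
        return self.page_table[page]

mem = ToyProcessMemory()
for address in (0, 100, 4095, 4096, 8192, 4097):
    mem.access(address)

print(mem.page_faults)       # 3: pages 0, 1 and 2 were each touched for the first time once
print(len(mem.page_table))   # 3 pages are now backed by frames
```

Six accesses cause only three page faults, because several of them land in a page that was already mapped.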

Swapping

So I just said that it’s possible to have more memory allocated than is physically available, because memory is actually allocated on demand. But what would happen when all your applications demand the use of their reserved memory? That will never fit inside your physical memory!

This is true, but not a problem. Your operating system can decide to offload blocks of (physical) memory to disk. This is called “swapping”. The OS is smart enough to offload blocks of memory that aren’t used very much. There are many techniques for this and every OS does it differently. The point is, an OS can decide to offload memory to disk so it has more room for “newer” data.

Note that this is all transparent to the applications. They don’t know whether their memory resides in physical memory or has been swapped out to disk. The virtual memory still looks the same, and an application can read memory locations that are swapped out just as well as non-swapped locations. But when your application hits a swapped block, your OS will be triggered again (again, through a page fault exception) and can reload the memory block from disk into memory. The same principle applies: when there is no room to load the block, it will offload other blocks to make room. Once it’s done, it returns to the program as if nothing has happened, and the program can continue reading the memory.
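As a rough sketch of that cycle, the toy below keeps a fixed number of “physical” frames and parks the least recently used page in a dictionary that plays the role of the disk. Least-recently-used is just one possible policy, picked here as an assumption; ToySwapper and its fields are hypothetical names.

```python
from collections import OrderedDict

class ToySwapper:
    """Hypothetical model of physical memory with a fixed number of frames."""

    def __init__(self, frames):
        self.frames = frames
        self.resident = OrderedDict()   # page -> data, least recently used first
        self.swap = {}                  # page -> data parked "on disk"
        self.swap_ins = 0
        self.swap_outs = 0

    def access(self, page):
        if page in self.resident:
            self.resident.move_to_end(page)    # mark as recently used
            return self.resident[page]
        # Page fault: make room first if physical memory is full.
        if len(self.resident) >= self.frames:
            victim, data = self.resident.popitem(last=False)
            self.swap[victim] = data
            self.swap_outs += 1
        if page in self.swap:
            data = self.swap.pop(page)         # reload from "disk"
            self.swap_ins += 1
        else:
            data = f"contents of page {page}"  # first use of this page
        self.resident[page] = data
        return data

ram = ToySwapper(frames=2)
for page in (1, 2, 1, 3, 2):
    ram.access(page)

print(list(ram.resident))            # [3, 2]
print(ram.swap_outs, ram.swap_ins)   # 2 1
```

The caller of access() never sees whether a page came from memory or from swap, which is the transparency described above.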

As you can imagine, swapping takes a lot of time. Reading a block from disk is much more work than reading a block from memory. Even though your application cannot see the difference, your operating system has to do lots of extra work involving slow hard disks, so the performance of your system will be poor when you need to swap (a lot). You CAN use your system without swap space, but that would mean that when your physical memory is full, the OS *MUST* shut down programs in order to free up room for others.

Paging

In order for all this to work, there must be a mapping between a process’s virtual memory and the actual physical memory. Your OS (and processor) can handle this automatically through something called the page table. It’s a big index (with 4KB pages, a 4GB address space needs at most about a million entries) which holds all the mappings between virtual and physical memory. When your application reads the memory at (virtual) location 0x123456, the processor must first look inside the page table to see where location 0x123456 is actually mapped. This is all done by your processor at the hardware level, so your application doesn’t have to do anything or even know about it. From your application’s point of view, it wants to read location 0x123456 and that’s it. Once the processor has read the page table, it knows the physical page where the data lives (for instance, the page starting at 0x10000) and reads the memory from the matching position inside it. Because this is all done inside the hardware itself, it’s a very fast process and doesn’t noticeably slow down your system.
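The lookup boils down to a bit of arithmetic. The sketch below assumes 4KB pages and reuses the example numbers from above; split_address is a made-up helper name, and the physical base 0x10000 is just the illustrative value from the text.

```python
PAGE_SIZE = 4096     # 4KB pages
OFFSET_BITS = 12     # 2**12 == 4096

def split_address(virtual_address):
    """Return (virtual page number, offset inside that page)."""
    return virtual_address >> OFFSET_BITS, virtual_address & (PAGE_SIZE - 1)

page, offset = split_address(0x123456)
print(hex(page), hex(offset))         # 0x123 0x456

# Assumed for illustration: the page table maps virtual page 0x123 to physical 0x10000.
physical_base = 0x10000
print(hex(physical_base + offset))    # 0x10456

# Number of 4KB pages in a 32-bit (4GB) address space:
print(2**32 // PAGE_SIZE)             # 1048576
```

Only the page number goes through the page table; the offset inside the page is carried over unchanged.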

Now every process has its own page table. This means that 0x123456 in process A can actually point to a different physical location than 0x123456 in process B. It all depends on what your OS has put in the page table. This also means that even though the virtual memory looks linear, it doesn’t have to be linear physically:

0x10000 -> 0x5000

0x11000 -> 0x6000

0x12000 -> 0x3000

0x13000 -> 0x7000

In this example, virtual addresses 0x10000 to 0x13FFF are mapped physically, but not linearly. Addresses 0x12000 to 0x12FFF actually reside in a lower part of physical memory than the rest. This makes it possible for your OS to pick the most efficient mapping without memory getting too fragmented.
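Here is the same mapping as a small Python sketch. The dictionary stands in for the page table and translate is a hypothetical helper; real page tables are multi-level structures walked by the processor, not Python dicts.

```python
PAGE_SIZE = 0x1000  # 4KB

# The example mapping from the text: virtual page start -> physical page start.
page_table = {
    0x10000: 0x5000,
    0x11000: 0x6000,
    0x12000: 0x3000,
    0x13000: 0x7000,
}

def translate(virtual_address):
    """Translate a virtual address, or raise if the page is not mapped."""
    base = virtual_address & ~(PAGE_SIZE - 1)   # round down to the page start
    offset = virtual_address - base
    if base not in page_table:
        raise LookupError(f"page fault at {virtual_address:#x}")
    return page_table[base] + offset

for address in (0x10FFF, 0x11000, 0x12ABC, 0x13FFF):
    print(f"{address:#x} -> {translate(address):#x}")
# 0x10fff -> 0x5fff
# 0x11000 -> 0x6000
# 0x12abc -> 0x3abc
# 0x13fff -> 0x7fff
```

Virtual addresses 0x10FFF and 0x11000 are neighbours, and in this table their physical addresses happen to be neighbours too, while 0x12ABC ends up much lower in physical memory.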

There is a bit of extra info inside the page tables, though. Your OS can define whether a certain memory block is read-only or writable, which type of process can access it (for instance, kernel-only data should only be writable from the kernel, not from applications), and also whether a memory block is executable or not. These are all part of making a multitasking OS secure: if something bad happens in one process, it doesn’t affect other processes, so the operating system can shut down the bad process and continue as if nothing happened. Virtual memory is part of what makes operating systems stable these days.
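A simplified way to picture those extra bits is as a set of flags per page that is checked on every access. The flag names and the check_access function below are invented for this sketch; real processors define their own bit layout and rules.

```python
from enum import IntFlag

class PageFlags(IntFlag):
    # Hypothetical flag names; real hardware uses its own bit layout.
    WRITABLE = 1
    USER = 2          # reachable from application code, not only the kernel
    EXECUTABLE = 4

def check_access(flags, *, write=False, execute=False, from_kernel=False):
    """Return True if the access is allowed, False if it should fault."""
    if not from_kernel and not (flags & PageFlags.USER):
        return False
    if write and not (flags & PageFlags.WRITABLE):
        return False
    if execute and not (flags & PageFlags.EXECUTABLE):
        return False
    return True

code_page = PageFlags.USER | PageFlags.EXECUTABLE
data_page = PageFlags.USER | PageFlags.WRITABLE
kernel_page = PageFlags.WRITABLE

print(check_access(code_page, write=True))                      # False
print(check_access(data_page, write=True))                      # True
print(check_access(data_page, execute=True))                    # False
print(check_access(kernel_page, write=True))                    # False
print(check_access(kernel_page, write=True, from_kernel=True))  # True
```

A denied access is what lets the OS stop a misbehaving process before it touches anything that isn’t its own.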

Because of the page table, it’s possible to share the same code or data between many processes. This is exactly what shared objects in Linux (or DLLs in Windows) do. They can be used by multiple programs, but they are only loaded once into physical memory. When a program needs one, its page table will point to the physical memory where the shared object or DLL is loaded, so it can be accessed by many programs.

Copy on write

Virtual memory actually has a very nifty trick up its sleeve to gain a lot of speed. As said, it’s possible for 2 processes to share the same physical location (although, because of paging, they can have different virtual addresses). This is cool, since instead of needing 2 times 4KB of memory, only one 4KB block is needed, which is a good way to save memory.

But what would happen if process A wants to modify a block of memory that is also shared with process B? If process A were allowed to change it directly, process B would see the change, which means the processes wouldn’t be isolated anymore. What actually happens is that when we write into a shared block (and we are actually allowed to do that as a process), a page fault exception is triggered (page fault exceptions are triggered a lot, so I’m not sure if “exception” is the correct word here, actually :) ). At that point, the OS can duplicate the block for process B. It then updates the page table of process B so it points to the newly duplicated block, and returns. Now process A can proceed with the update, and since its block is now separate from B’s, process B will not see the change.

This mechanism is called copy-on-write and it saves a lot of time and memory. Suppose we have 1MB of shared data. As long as nobody changes that data, we do not need to physically copy it, which saves both time and memory. When somebody changes 1 byte, only the page (the 4KB block) containing that byte is duplicated. The rest is still shared. This technique is much faster and more efficient as long as you don’t completely change the original data, which you won’t in most cases.
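The 1MB example can be played through with a toy model. Everything here (the SharedPages class, the process names "A" and "B") is hypothetical. Note that in this sketch the private copy goes to the process that writes; which side ends up with the copy is an implementation detail, the result is the same.

```python
PAGE_SIZE = 4096

class SharedPages:
    """Hypothetical model: two processes share frames until one of them writes."""

    def __init__(self, data):
        # Physical frames: one bytearray per 4KB page.
        self.frames = [bytearray(data[i:i + PAGE_SIZE])
                       for i in range(0, len(data), PAGE_SIZE)]
        # Both page tables start out pointing at the same frames.
        self.tables = {"A": list(range(len(self.frames))),
                       "B": list(range(len(self.frames)))}
        self.copies = 0

    def _is_shared(self, frame):
        return sum(table.count(frame) for table in self.tables.values()) > 1

    def write(self, process, address, value):
        page, offset = divmod(address, PAGE_SIZE)
        frame = self.tables[process][page]
        if self._is_shared(frame):
            # Copy-on-write: duplicate just this one page for the writer.
            self.frames.append(bytearray(self.frames[frame]))
            frame = len(self.frames) - 1
            self.tables[process][page] = frame
            self.copies += 1
        self.frames[frame][offset] = value

    def read(self, process, address):
        page, offset = divmod(address, PAGE_SIZE)
        return self.frames[self.tables[process][page]][offset]

mem = SharedPages(bytes(1024 * 1024))    # 1MB of shared data: 256 pages
mem.write("A", 5000, 0xFF)               # change a single byte
print(mem.read("A", 5000), mem.read("B", 5000))   # 255 0
print(mem.copies, len(mem.frames))       # 1 257
```

One changed byte costs one extra 4KB page; the other 255 pages are still shared by both processes.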

If you are dealing with PHP, you might have heard about copy-on-write. It’s a technique also used when passing variables to functions or copying data. Suppose we have a very long (1MB) string inside variable $a and we copy it to variable $b. All PHP does is create a variable $b that points to the data of $a, with a “copy-on-write” flag set. Only when we are about to change either $a or $b will an actual duplicate be made, so that $a and $b become different.

Virtual memory from application point of view

Even though we don’t really need to deal with all the virtual-to-physical conversions in our daily work, knowing about them can sometimes come in handy. For instance: what does it mean when a program uses 10% of memory? Does it mean it uses 10% of physical memory? Is everything inside physical memory, or has the OS swapped some of that memory out to disk? It turns out that finding the actual memory usage is not that easy.

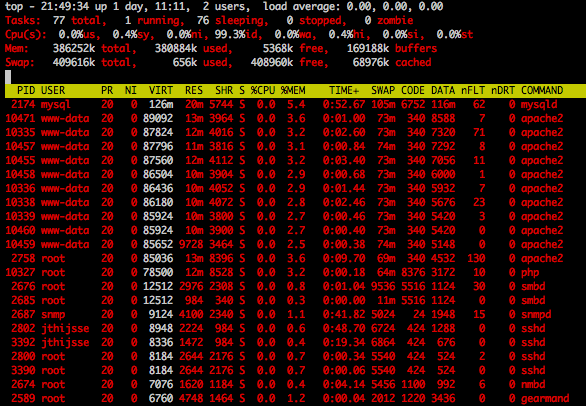

As an example, lets take a look at some output from the linux command “top”:

Here are a few important fields:

VIRT

The amount of virtual memory that your application *CAN* access. This is not the amount of memory the application actually uses. Don’t get confused, and don’t make the mistake of summing all those values up in order to get the amount of memory used inside your system. You can see many large values here, but in the end your system might not use that much. This is especially the case when dealing with multi-process applications like Apache, for instance, where lots of data is shared between processes.

RES

The physical memory that a process is using. This does not include swap space (note that the SWAP column of top does not display the swap space that is used. Don’t use it!). However, some of this physical memory could be shared by other processes. So it still doesn’t give you a clear overview of what is actually used.

SHR

The amount of memory that COULD be shared. This memory is used by this process, but can also be used by others: for instance, things like shared objects. It does not, however, tell you exactly how much of that memory is actually shared with others.

So in all fairness, finding the exact amount of memory a process uses is very difficult. If you really want to find out more about memory usage, use dedicated programs like pmap, but remember: virtual and physical memory are different things, and all of it can (and will) be shared and/or swapped. Don’t make assumptions based on statistics you don’t understand.
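If you want to look at the raw numbers yourself, on Linux the kernel exposes them per process in /proc/<pid>/status, where VmSize corresponds to what top shows as VIRT and VmRSS to RES. Below is a small sketch that parses that format. The parse_status helper and the sample text are made up for illustration, and the live part only runs where /proc exists.

```python
import os

def parse_status(text):
    """Extract the 'Vm*' lines of a /proc/<pid>/status file as kB values."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if name.startswith("Vm") and len(fields) == 2 and fields[1] == "kB":
            values[name] = int(fields[0])
    return values

# Made-up sample in the format Linux uses; the numbers are illustrative only.
sample = "Name:\tdemo\nVmSize:\t  204800 kB\nVmRSS:\t   10240 kB\n"
usage = parse_status(sample)
print(usage["VmSize"], usage["VmRSS"])   # 204800 10240

# On Linux, the same function works on the live file for this process.
if os.path.exists("/proc/self/status"):
    with open("/proc/self/status") as handle:
        live = parse_status(handle.read())
    print(live.get("VmSize"), live.get("VmRSS"))
```

In the sample, the process has 200MB of virtual memory but only 10MB resident, which is exactly the kind of gap between VIRT and RES described above.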

Conclusion

Even though I tried to explain it as simply as possible, virtual memory management is one of the hardest parts to get right when dealing with or creating operating systems. The virtual memory manager (VMM) must be capable of making judgement calls about swapping, allocating data efficiently, copy-on-write actions etc. The basics of virtual memory are in fact pretty easy, and even though we must go through some hassle as operating system developers, application developers should probably never have to deal with the internals of the VMM. From their point of view, malloc() is the only thing that is needed, and it-just-works… But maybe now you have a little bit of understanding of what actually goes on inside the OS and processor when dealing with memory.